I will leave the Bengtsson & Zyczkowski book for a while to look into dynamical systems and nonlinear dynamics, a topic I covered in my talk in India.

The subject of dynamical systems is very broad (whether approached from physics or mathematics), which makes it hard for a beginner (me) to survey. It is better for me to explain why I am interested in this area. The procedure of quantization often begins with classical (dynamical) systems, and a major interest of mine is in quantizing (a particle system on) nonlinear configuration spaces. Most of the time we treat the nonlinearities intrinsically by adopting the appropriate canonical variables to be quantized (or by quantization with constraints). Some of these nonlinear systems can be classically chaotic, such as particles on hyperbolic surfaces (see the review article “Chaos on the Pseudosphere” by Balazs and Voros or “Some Geometrical Models of Chaos” by Caroline Series), and it is often pondered how such behaviour translates into the quantum regime. My original concern in this topic is mostly how the complex topologies of hyperbolic surfaces get encoded in quantum theory. We will defer such discussions to a later time.

What is a dynamical system? There are three ingredients to a dynamical system:

- Evolution parameter (usually time) space $T$;

- State space $M$;

- Evolution rule $\Phi_t : M \to M$ for $t \in T$.

Note that $T$ can either be $\mathbb{Z}$ or $\mathbb{R}$. The former gives discrete dynamical systems, where the evolution rule is usually a difference equation, while the latter gives continuous dynamical systems, with the evolution rule described by an ordinary differential equation. One can also discretize the state space itself to give cellular automata with their update rules (see also graph dynamical systems). Perhaps the most famous cellular automaton (CA) is Conway’s Game of Life, built upon a two-dimensional (rectangular) lattice, whose update rule is given by

- Any live cell with fewer than two live neighbours dies (underpopulation).

- Any live cell with two or three live neighbours lives.

- Any live cell with more than three live neighbours dies (overpopulation).

- Any dead cell surrounded by exactly three live neighbours becomes live (regeneration).
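The four rules above can be sketched in a few lines of Python. This is only a minimal illustration: the name `life_step`, the representation of the lattice as a set of live `(row, col)` cells, and the unbounded lattice are my own assumptions, not part of any standard implementation.

```python
from collections import Counter

def life_step(live):
    """Advance one generation; `live` is a set of (row, col) live cells."""
    # Count the live neighbours of every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Regeneration on exactly three neighbours; survival on two or three.
    # Every other cell is dead in the next generation.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}

# A "blinker": three cells in a row flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(life_step(blinker)))
```

Storing only the live cells avoids fixing a lattice size, at the cost of not modelling any boundary.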

Fascinating configurations can be constructed from such simple rules. Even simpler are the elementary one-dimensional CAs, which Wolfram has classified according to their update rules: rule 0 to rule 255 ($2^8 = 256$ rules). It was proven by Cook that rule 110 is capable of universal computation, i.e. it can emulate a universal Turing machine. Note that in the above CAs there are only two states (live or dead). One can generalise the number of states beyond two, e.g. a CA with three-valued states (RGB) can be used in pattern and image recognition (see e.g. https://www.sciencedirect.com/science/article/pii/S0307904X14004983).
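To make the rule numbering concrete, here is a small sketch of an elementary CA update. The function name `eca_step` and the periodic (ring) boundary are my own assumptions for illustration; the encoding itself is the usual one, in which the three-cell neighbourhood is read as a number from 0 to 7 and the corresponding bit of the rule number gives the new state.

```python
def eca_step(cells, rule=110):
    """One update of an elementary CA on a ring (periodic boundary assumed)."""
    n = len(cells)
    out = []
    for i in range(n):
        # Neighbourhood (left, centre, right) read as a 3-bit number 0..7.
        idx = (cells[i - 1] << 2) | (cells[i] << 1) | cells[(i + 1) % n]
        # Bit `idx` of the rule number is the new state of cell i.
        out.append((rule >> idx) & 1)
    return out

# Evolve rule 110 from a single live cell and print a few generations.
row = [0] * 15 + [1]
for _ in range(8):
    print("".join(".#"[c] for c in row))
    row = eca_step(row)
```

Since each of the 8 neighbourhoods maps to 0 or 1, there are exactly 256 possible rules, which is where the range 0 to 255 comes from.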

One can further abstract the notion of a dynamical system, as done by Giunti & Mazzola in “Dynamical Systems on Monoids: Toward a General Theory of Deterministic Systems and Motion” (see also here). We will not pursue this but instead mention two other cases not normally classed as dynamical systems.

I would like to add to the above some dynamical systems encountered in (theoretical) physics that ought to be distinguished from the classes above. First, there are systems whose equations are partial differential equations, i.e. those with differential operators with respect not only to time but also to space. The most notable example is the Navier-Stokes equation that governs fluids. At this point, one should also mention relativistic systems, whose evolution parameter might not even be separately identifiable from the spatial coordinates. Relatively recently, techniques of (conventional) dynamical systems have found their way into cosmology, via the long-term behaviour of cosmological solutions and the reduction of the full Einstein equations (PDEs) to simpler ones. See the book of Alan Coley or the book of Wainwright & Ellis. See also the articles of Boehmer & Chan and Bahamonde et al. (published version here). It is interesting to note that an author of the former article, Dr. Nyein Chan, was previously at Swinburne University, Sarawak Campus. He has probably returned to his home country, Myanmar (his story can be read here).

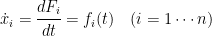

To discuss the geometry of dynamical systems, I make extensive use of the notes by Berglund (arXiv: math/0111177). To start, dynamical systems are equipped with a first-order ODE describing the dynamical equation:

$$\frac{dx^i}{dt} = f^i(x),$$

where $f$ is a function on the state space $M$. Using the chain rule (and summing over the repeated index $i$), one can rewrite this equation as

$$\frac{d}{dt} = \frac{dx^i}{dt}\frac{\partial}{\partial x^i} = f^i(x)\frac{\partial}{\partial x^i}.$$

Note that $f = f^i\,\partial/\partial x^i$ can now be treated as a vector field (as one does in usual (local) coordinate-based differential geometry). A vector field (despite its local coordinatization, whose transformation law is known) encodes geometric information about the state space it lives on. The easiest way to see this is the hairy ball theorem, popularly stated as: one can’t comb the hair of a coconut. On the other hand, one can do so on the torus (the surface of a doughnut). Technically, this is due to the nonvanishing Euler characteristic of the sphere ($\chi = 2$), whereas for the torus it vanishes ($\chi = 0$).

The standard example of a dynamical system comes from mechanical systems (say, one particle obeying Newton’s laws). However Newtonian equations for such systems are second order ODEs. This simply implies that the mechanical state should be a pair of variables, say of position and momenta  forming the phase space

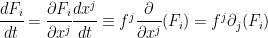

forming the phase space  . Formulating the mechanical system as Hamiltonian mechanics, one can rewrite the Newtonian equations of motion as two sets of first order ODEs known as Hamilton’s equations:

. Formulating the mechanical system as Hamiltonian mechanics, one can rewrite the Newtonian equations of motion as two sets of first order ODEs known as Hamilton’s equations:

where $H$ is the Hamiltonian of the system. It is convenient to rewrite these equations using another algebraic structure known as the Poisson bracket, which is defined as

$$\{f, g\} = \frac{\partial f}{\partial q^i}\frac{\partial g}{\partial p_i} - \frac{\partial f}{\partial p_i}\frac{\partial g}{\partial q^i}.$$

Then one can rewrite Hamilton’s equations as

$$\dot{q}^i = \{q^i, H\}, \qquad \dot{p}_i = \{p_i, H\}.$$
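As a quick sanity check of Hamilton’s equations in Poisson bracket form, one can estimate the bracket numerically for one degree of freedom. Everything named here is my own choice for illustration: the helper `poisson_bracket` (a central-difference estimate), the unit-mass, unit-frequency harmonic oscillator $H = p^2/2 + q^2/2$, and the sample point.

```python
def poisson_bracket(f, g, q, p, h=1e-5):
    """Central-difference estimate of {f, g} = f_q g_p - f_p g_q at (q, p)."""
    d_q = lambda F: (F(q + h, p) - F(q - h, p)) / (2 * h)
    d_p = lambda F: (F(q, p + h) - F(q, p - h)) / (2 * h)
    return d_q(f) * d_p(g) - d_p(f) * d_q(g)

# Hypothetical test system: unit-mass, unit-frequency harmonic oscillator.
H = lambda q, p: 0.5 * p * p + 0.5 * q * q
Q = lambda q, p: q   # coordinate function
P = lambda q, p: p   # momentum function

q0, p0 = 0.3, -1.2
q_dot = poisson_bracket(Q, H, q0, p0)   # approximates  dH/dp =  p0
p_dot = poisson_bracket(P, H, q0, p0)   # approximates -dH/dq = -q0
print(q_dot, p_dot)
print(poisson_bracket(Q, P, q0, p0))    # canonical bracket {q, p}, close to 1
```

For this quadratic Hamiltonian the central differences are exact up to rounding, so the estimates agree with $\dot q = p$ and $\dot p = -q$ to high accuracy.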

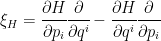

The convenience is that the dynamics is contained in the algebraic form of Poisson bracket. Thus, studying the Poisson bracket structure is equivalent to studying the dynamical structure. One can further ‘geometrize’ this algebraic structure by considering the vector fields on the phase space  and

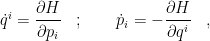

and  and the Hamilton’s equation as

and the Hamilton’s equation as

,

,

where  is a covariant antisymmetric tensor known as the symplectic form and

is a covariant antisymmetric tensor known as the symplectic form and

.

.

A manifold (space) equipped with a symplectic form is known as a symplectic manifold. With the Poisson bracket replaced by the symplectic form, one can simply study the properties of the symplectic form to learn about the dynamics. Finding symmetries that preserve the symplectic form has become the basis of (some) quantization procedures.

The motivation to study dynamical systems is to learn about chaotic dynamical systems. The word chaos conjures images like the ones below (a favourite picture from the Bender & Orszag book and a billiard):

Source: Bender & Orszag, “Advanced Mathematical Methods for Scientists and Engineers” (McGraw Hill, 1978) Fig.4.23 on page 191.

However, the iconic diagram one associates with chaotic dynamical systems is the two-winged Lorenz butterfly diagram (later), which I thought had structure. In such a system, it is the sensitivity to initial conditions of the orbits traversed that plays the characteristic role. The orbits above are perhaps closer to a different concept, that of the ergodic hypothesis. How sensitivity to initial conditions came to be called chaotic is quite interesting. A more mundane name for the whole subject is nonlinear dynamics, which was used before the term chaos became popular.

So how does one get a useful handle on such systems with complicated behaviour? One begins by looking for simple solutions, i.e. stationary solutions. Recall $\dot{x}^i = f^i(x)$ and $f = f^i\,\partial/\partial x^i$. (Note: at times, I will not write out the indices, which should be understood from context.) A stationary solution is one that obeys $\dot{x} = 0$, i.e. it doesn’t change with time. Of related interest are fixed points $x^\star$ of the flow $\Phi_t$ such that $\Phi_t(x^\star) = x^\star$ for all $t$; these are also called equilibrium points. Points $x^\star$ for which $f(x^\star) = 0$ are called singular points of the vector field $f$; their orbits $\{x^\star\}$ are also called stationary orbits.

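We can now explore solutions near the equilibrium point $x^\star$, for which

$$\dot{y} = f(x^\star + y) = A y + g(y),$$

where

$$y = x - x^\star, \qquad A = \frac{\partial f}{\partial x}(x^\star),$$

i.e. we linearize about the equilibrium, with the higher-order terms $g(y)$ assumed to be bounded by some constant times $\|y\|^2$. In the linear case ($g = 0$), one has

$$y(t) = e^{At}\, y(0).$$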

Thus, one can see that the eigenvalues $a_j$ of $A$ will play an important role in the long-term behaviour of the solutions.

To take advantage of this fact, one can use the projectors $P_j$ onto the eigenspaces of $a_j$ to study the behaviour of equilibrium points. Construct projectors onto sectors of eigenvalues

$$P^+ = \sum_{\operatorname{Re} a_j > 0} P_j, \qquad P^- = \sum_{\operatorname{Re} a_j < 0} P_j, \qquad P^0 = \sum_{\operatorname{Re} a_j = 0} P_j.$$

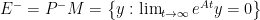

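Then define the subspaces (with $y \in \mathbb{R}^n$)

$$E^+ = P^+\mathbb{R}^n = \{\, y : \lim_{t\to-\infty} e^{At} y = 0 \,\};$$

$$E^- = P^-\mathbb{R}^n = \{\, y : \lim_{t\to+\infty} e^{At} y = 0 \,\};$$

$$E^0 = P^0\mathbb{R}^n,$$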

which are called respectively the unstable subspace, stable subspace, and centre subspace of $A$; they are invariant subspaces of $e^{At}$. With respect to these spaces, one can actually classify the equilibrium points. The equilibrium point is a sink if $E^+ = E^0 = \{0\}$; a source if $E^- = E^0 = \{0\}$; a hyperbolic point if $E^0 = \{0\}$; and an elliptic point if $E^+ = E^- = \{0\}$. Note that one has a richer variety of equilibrium points than in the one-dimensional case simply because there are more ‘directions’ to consider in higher-dimensional cases (characterised by the eigenvalues of $A$). To illustrate this, we consider the two-dimensional case with two eigenvalues $a_1, a_2$ (borrowing a diagram from Berglund):

Case (a) refers to a node, in which $a_1, a_2$ are real with $a_1 a_2 > 0$ (arrows either all pointing in or all pointing out). Case (b) is a saddle point, in which $a_1 < 0 < a_2$. Cases (c) and (d) happen when $a_1 = \bar{a}_2$ are complex, hence giving rotational (or oscillatory) motion in phase space. Cases (e) and (f) are more complicated versions of nodes that arise when the eigenvalues are degenerate (please refer to Berglund for details). At this juncture, it is appropriate to mention the related concept of basins of attraction, which appears in the chaotic dynamics literature. In particular, one has the concept of a strange attractor, arising from the fact that while the flow $\Phi_t$ is assumed continuous, the vector field may be singular at some points, thus giving rise to intricate structures of non-integer dimension known as fractals (see this article).
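The classification by the signs of the real parts of the eigenvalues can be sketched for the two-dimensional linear system $\dot{y} = Ay$. The function name `classify_equilibrium`, the tolerance `tol`, and the label strings are my own assumptions; note that sinks and sources are also hyperbolic in the sense above (no centre subspace), so the last label only singles out the mixed-sign case.

```python
import cmath

def classify_equilibrium(A, tol=1e-12):
    """Classify the origin of y' = A y for a real 2x2 matrix A = [[a, b], [c, d]]."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    # Eigenvalues are the roots of  lambda^2 - tr * lambda + det = 0.
    disc = cmath.sqrt(tr * tr - 4 * det)
    eigs = ((tr + disc) / 2, (tr - disc) / 2)
    re = [z.real for z in eigs]
    if all(abs(r) <= tol for r in re):
        return "elliptic"            # only a centre subspace
    if any(abs(r) <= tol for r in re):
        return "non-hyperbolic"      # centre subspace alongside another one
    if all(r < 0 for r in re):
        return "sink"                # only a stable subspace
    if all(r > 0 for r in re):
        return "source"              # only an unstable subspace
    return "hyperbolic saddle"       # stable and unstable, no centre

print(classify_equilibrium([[1, 0], [0, -1]]))
print(classify_equilibrium([[0, 1], [-1, 0]]))
```

Using the trace and determinant keeps the sketch free of external libraries; for larger matrices one would compute the eigenvalues numerically instead.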

To proceed beyond the linear case, one needs extra tools, namely Lyapunov functions (often in the form of quadratic forms), which set up level curves that phase space trajectories can approach or cross and which help characterise the stability of equilibrium points in general. The Lyapunov functions are those functions $V$ such that

- $V(x) > V(x^\star)$ for $x \neq x^\star$ in the neighbourhood of $x^\star$;

- its derivative along orbits, $\dot{V}(x) = \nabla V(x) \cdot f(x)$, is non-positive, showing that $x^\star$ is stable (and asymptotically stable if the derivative is strictly negative away from $x^\star$).
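To see the Lyapunov condition at work, here is a small numerical sketch. The test system (a damped oscillator $\dot{q} = p$, $\dot{p} = -q - \gamma p$ with $\gamma = 0.5$), the quadratic candidate $V = (q^2 + p^2)/2$, and all function names are my own assumptions for illustration. Analytically, $\dot{V} = -\gamma p^2 \le 0$, and the code simply samples this on a grid around the equilibrium at the origin.

```python
def f(q, p, gamma=0.5):
    """Vector field of a damped oscillator (a hypothetical test system)."""
    return p, -q - gamma * p

def V(q, p):
    """Candidate Lyapunov function: a quadratic form with its minimum at the origin."""
    return 0.5 * (q * q + p * p)

def V_dot(q, p, gamma=0.5):
    """Derivative of V along orbits, grad V . f, using grad V = (q, p)."""
    fq, fp = f(q, p, gamma)
    return q * fq + p * fp

# Sample a grid around the equilibrium (0, 0) and record the largest V_dot.
grid = [i / 10 for i in range(-10, 11)]
worst = max(V_dot(q, p) for q in grid for p in grid)
print(worst)   # not positive: V does not increase along orbits on this grid
```

A grid check like this is of course not a proof; it only illustrates the sign condition that the analytic computation establishes.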

To illustrate this, we again borrow a diagram from Berglund to show how phase space orbits approach or cross the level curves of $V$.

Cases (a) and (b) are respectively the stable and asymptotically stable equilibrium points, where trajectories cut the level curves in the direction opposite to their normals. Case (c) is the case of an unstable equilibrium point, generalizing the linear case, where orbits may approach the point in one region and move away in another. Such orbits are called hyperbolic flows. This is essentially the case of interest. Note in particular that if one reverses the arrows, the split into two separate regions of stable and unstable spaces is preserved (they merely exchange roles), which underlies the special status of hyperbolicity.

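One can now state a result known in the literature: given a hyperbolic flow $\Phi_t$ on some $U$, a neighbourhood of a hyperbolic equilibrium point $x^\star$, there exist local stable and unstable manifolds

$$W^s_{\mathrm{loc}}(x^\star) = \{\, x \in U : \lim_{t\to\infty} \Phi_t(x) = x^\star,\ \Phi_t(x) \in U\ \ \forall t \ge 0 \,\};$$

$$W^u_{\mathrm{loc}}(x^\star) = \{\, x \in U : \lim_{t\to-\infty} \Phi_t(x) = x^\star,\ \Phi_t(x) \in U\ \ \forall t \le 0 \,\}.$$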

For further technical details, consult Araujo & Viana, “Hyperbolic Dynamical Systems” (arXiv:0804.3192 [math.DS]) and Dyatlov, “Notes on Hyperbolic Dynamics” (arXiv:1805.11660 [math.DS]).

Examples for which hyperbolic flows are known are geodesic flows on negatively curved (hyperbolic) surfaces and billiard balls in Euclidean domains with concave boundaries (see Dyatlov). Hyperbolicity then became a paradigm for structurally stable ergodic systems, as discussed by Smale in the 1960s (see Smale, “Differentiable Dynamical Systems”, Bull. Amer. Math. Soc. 73 (1967) 747-817). While this is so, unknown to the mathematicians then, E. Lorenz had discovered a dynamical system that was neither hyperbolic nor structurally stable (see Lorenz, “Deterministic Nonperiodic Flow”, J. Atmosph. Sci. 20 (1963) 130-141). A new paradigm is needed to account for such systems. However, we will defer this discussion to a future post.